BUMBA CLI – MODULAR

DESIGN FRAMEWORK

This case study documents an eight-month research journey building Bumba CLI 1.0, a multi-agent orchestration framework I developed alongside Claude as a deliberate exploration of what's possible when you systematically encode decades of product development knowledge into an agentic system. Working as a design engineer without formal software engineering training, I approached this as much a learning project as an engineering challenge, building sophisticated patterns for agent coordination, cost optimization, and hierarchical task distribution that mirror how real product teams function. The framework grew ambitious, spanning nearly 180,000 lines across agent orchestration, design-to-code pipelines, voice interaction, and workflow automation, but that ambition served a purpose: understanding multi-agent systems deeply enough to identify which patterns represent genuine innovations worth preserving. As the project matured, I recognized that completing this vision to production quality was impossible alone, but the knowledge accumulated was invaluable. The strategic pivot wasn't abandoning the work; it was extracting Bumba's most powerful architectural patterns and reimagining them as Claude Code-native skills, plugins, and agents. This transformation reflects an evolution in thinking: AI development tools should be modular, composable, and accessible, not monolithic frameworks. What began as a moonshot attempt to build my own orchestration system became a systematic exploration of how human expertise translates into agentic intelligence, and how those learnings can benefit the broader Claude Code community through shared, reusable components.

[YEAR]

2025-2026

[CLIENT]

Own Product

[TOOLS]

Figma, Claude Code

[TYPE]

AI System Design

[THE CHALLENGE]

The modern workforce is undergoing a seismic shift, and I wanted to understand it by building something ambitious. As a design engineer with 20 years of product development experience but no formal software training, I saw AI reshaping how we work and wondered: could I transform decades of hard-earned knowledge about orchestrating human teams into a framework that orchestrates AI agents instead? This wasn't about claiming mastery of some new domain—it was about genuine curiosity and a willingness to spend eight months learning through building. The question that drove me was deeply personal: if the future economy favors individuals who can wield AI to become their own businesses, could I develop both the technical capability and systematic approach to make that real? Building Bumba became my research project—a way to encode product development operations from strategy to execution into an agentic system I could control and evolve, testing whether the patterns I'd learned orchestrating designers, engineers, and product managers could translate into intelligent agent coordination. The challenge wasn't just technical sophistication; it was creating something flexible enough to grow alongside my understanding, knowing I'd make mistakes, over-engineer parts, and need to course-correct as both the technology and my own knowledge matured.

The project started with exploration and collaboration. After two years studying AI with a group of engineers, I connected with Koshi Mazaki, a brilliant new media artist, creative director, and engineer. We spent one month experimenting with custom frameworks and comparing notes before Koshi moved on to other projects. I continued alone for the next eight months with an admittedly ambitious goal: build a multi-agent system structured like an actual design studio, with five operational departments (Engineering, Product Strategy, QA & Testing, Operations, and Design), each with specialized workflows. The idea was straightforward even if the execution wasn't: if companies were replacing human teams with AI, could I build a framework as capable as a modern design studio? What made this possible wasn't formal engineering training; I earned the title of design engineer through practice, learning by building. Creating Bumba meant countless conversations with Claude, translating 20 years of product development knowledge into working code through iteration, debugging, and more than a few late nights questioning whether this was even possible. In the early days of the project, I often found myself wrestling with the earlier Claude models. During one of those sessions, the first Opus model took the unsanctioned liberty of overwriting some of my solid work. I exclaimed 'BUMBA-CLAUDE!' and in that moment this unusual brand identity was born. The result was a sophisticated CLI with interactive dialogues for granular control, orchestrating specialized agents, tool connections, and complex workflows. Each feature reflects hard-won lessons about how teams actually collaborate, now encoded into an agentic system that could handle the full spectrum of tasks a professional design studio tackles.

[BUMBA CLI WALKTHROUGH]

[FIRST IMPRESSION]

Installer screen sets expectations that Bumba is a professional orchestration framework, not a simple command wrapper.

[BRAND IDENTITY]

Signature gradient and typography establish visual consistency that carries through the entire CLI experience, from installation through daily use.

[INSTALL DISPLAY]

Feature categories highlighted upfront: Multi-Agent Intelligence, 60+ Commands, Enterprise Quality, Advanced Integrations.

[ARCHITECTURE]

Essential onboarding info in digestible chunks: tagline, version, features by category, and integrations.

[HIERARCHICAL]

Five operational departments displayed with color-coded badges establish clear mental models before users interact with any commands.

[COGNITIVE LOAD]

Progressive disclosure strategy presents core capabilities without overwhelming newcomers with 60+ command options immediately.

[SETUP WIZARD]

One of the first things I learned building Bumba was that complex tools fail if they're hard to start using. The setup wizard emerged from recognizing that asking developers to manually edit configuration files or hunt down API keys from multiple providers is a recipe for abandoned installations. I wanted Bumba to feel approachable from the first interaction, so the wizard became a guided conversation rather than a technical gauntlet: detecting existing configurations, backing up previous setups if they exist, collecting API keys for multiple AI providers (OpenAI, Anthropic, Google, OpenRouter), configuring MCP servers for Claude integration, setting up the Universal Tool Bridge, testing all connections in real-time, and saving everything to the right locations. The system validates at every step, provides hints with direct links to where you can actually get your API keys (because I kept forgetting those URLs myself), offers project templates for common use cases, and adapts whether you're installing fresh or upgrading. It's not revolutionary—it's just thoughtful infrastructure work that removes friction, the kind of detail you notice when it's missing and appreciate when it's done well. Getting this right meant developers could focus on building with AI rather than fighting configuration errors, which felt like the most honest form of respect for anyone willing to try Bumba.

[STEP-BY-STEP]

Interactive walkthrough establishes basic project settings, from configuration detection to final setup.

[MULTI-MODEL]

Add API keys for multiple AI providers (OpenAI, Anthropic, Google, OpenRouter) to power multi-agent orchestration.

[READY-TO-USE]

Wizard completion generates ENV file and folder structure, ready for immediate Bumba CLI development.

[AGENT ORCHESTRATION]

Building the agent orchestration system forced me to think deeply about how human teams actually work—not the idealized org charts, but the messy reality of how designers, engineers, and product managers collaborate under pressure. I structured Bumba around five department chiefs (Product Strategy, Engineering, Design, Quality Assurance, Operations), each commanding seven specialized agents, creating a 40-agent ecosystem that mirrors real design studios I've worked in. This wasn't some arbitrary number; it reflected actual team structures I'd seen succeed and fail over 20 years. Each chief operates with domain expertise and can spawn specialists as needed—the Product Strategy Chief deploying Market Researchers and Requirements Engineers, the Engineering Chief commanding Backend Developers and DevOps experts—enabling workflows where strategic decisions flow from chiefs to specialists and results aggregate back up for synthesis. The orchestration layer implements six task distribution strategies I discovered through trial and error: round-robin for simple load balancing, capability-matched for routing to specialists with relevant skills, performance-based for assigning work to historically successful agents, priority-first for critical path execution, dependency-aware for complex task graphs, and load-balanced for equalizing workload. The Master Orchestrator serves as executive intelligence, analyzing requests, determining optimal strategies, and coordinating cross-department collaboration while maintaining memory of what worked before. Resource management uses dynamic pooling—chiefs stay persistent as coordination points while specialists spawn on-demand and retire after completion—letting the system run 3-5 agents concurrently with built-in resilience, automatic retry logic, and graceful degradation. 
Getting this right took months of debugging coordination failures and rethinking assumptions about how AI agents could collaborate, but eventually it transformed what could have been chaotic into something that genuinely felt like orchestrating a professional team rather than just firing off API calls.
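To make a few of the distribution strategies above concrete, here is a minimal JavaScript sketch. It is not Bumba's actual code: the agent shape (`name`, `skills`, `activeTasks`) and every function name are assumptions made for illustration. It covers only three of the six strategies: round-robin, capability-matched, and load-balanced.

```javascript
// Hypothetical agent records; the fields are illustrative, not Bumba's real schema.
const agents = [
  { name: "backend-developer", skills: ["api", "database"], activeTasks: 2 },
  { name: "devops-engineer", skills: ["ci", "deploy"], activeTasks: 0 },
  { name: "frontend-developer", skills: ["ui", "api"], activeTasks: 1 },
];

// Round-robin: hand out agents in a fixed rotation, ignoring the task itself.
function createRoundRobin(pool) {
  let next = 0;
  return () => {
    const agent = pool[next];
    next = (next + 1) % pool.length;
    return agent;
  };
}

// Capability-matched: pick the agent whose skills overlap most with the task's needs.
function pickByCapability(pool, task) {
  let best = null;
  let bestScore = 0;
  for (const agent of pool) {
    const score = task.requiredSkills.filter((s) => agent.skills.includes(s)).length;
    if (score > bestScore) {
      best = agent;
      bestScore = score;
    }
  }
  return best; // null when no agent has any of the required skills
}

// Load-balanced: pick the agent with the fewest tasks in flight.
function pickLeastLoaded(pool) {
  return pool.reduce((a, b) => (b.activeTasks < a.activeTasks ? b : a));
}

const nextAgent = createRoundRobin(agents);
const rotation = [nextAgent().name, nextAgent().name, nextAgent().name, nextAgent().name];
const matched = pickByCapability(agents, { requiredSkills: ["ci", "deploy"] });
const idle = pickLeastLoaded(agents);
console.log(rotation, matched.name, idle.name);
```

In a sketch like this, an orchestrator would choose one of these functions per request; the performance-based, priority-first, and dependency-aware strategies would need execution history and a task graph, which are left out here.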

[COST OPTIMIZATION]

Early in development, I made a sobering discovery: running 40 AI agents without cost optimization would bankrupt most individual developers within days. This realization led to building aggressive cost intelligence throughout the orchestration architecture, prioritizing free-tier models (Google Gemini, DeepSeek, Qwen) for appropriate tasks while reserving paid models (Claude, GPT-4) for genuinely complex operations that justified the expense. The Free Tier Manager tracks usage across providers in real-time, maximizing free API quotas before touching paid services—a pattern I developed after accidentally burning through $200 in a single testing session and realizing this couldn't scale. The Cost-Optimized Orchestrator analyzes each task's complexity, urgency, and requirements to route intelligently: simple tasks to Gemini Pro, medium-complexity work to DeepSeek or Qwen variants, complex operations to premium models only when necessary. The system maintains detailed cost metrics tracking executions, free versus paid ratios, actual costs, and money saved, while offering multiple execution strategies (free-only, free-first, balanced, quality-first) so users control the cost-quality tradeoff based on real constraints. This wasn't theoretical optimization—it was survival economics, and the architecture routinely achieves 3-5x cost savings compared to naive "always use the best model" approaches. Getting this right made sophisticated multi-agent workflows economically viable for individual developers and small teams, transforming Bumba from an interesting experiment only venture-backed companies could afford into something practitioners could actually use daily.
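The routing idea can be sketched in a few lines of JavaScript. This is an illustration under stated assumptions, not the Cost-Optimized Orchestrator itself: the model table, the quota numbers, the `complexity` labels, and the functions `routeTask` and `execute` are all hypothetical. Only the four strategy names and the free-versus-paid tiering come from the description above.

```javascript
// Illustrative model table. Quotas and complexity labels are made-up values.
const models = [
  { id: "gemini-pro", free: true, handles: ["simple"], remainingQuota: 2 },
  { id: "deepseek", free: true, handles: ["simple", "medium"], remainingQuota: 1 },
  { id: "claude", free: false, handles: ["simple", "medium", "complex"], remainingQuota: Infinity },
];

// Choose a model for a task under one of the four execution strategies.
function routeTask(task, strategy, table = models) {
  const capable = table.filter((m) => m.handles.includes(task.complexity));
  const freeWithQuota = capable.filter((m) => m.free && m.remainingQuota > 0);
  const paid = capable.filter((m) => !m.free);

  switch (strategy) {
    case "free-only": // never spend money; return null if no free model fits
      return freeWithQuota[0] ?? null;
    case "free-first": // use free quota while it lasts, then fall back to paid
      return freeWithQuota[0] ?? paid[0] ?? null;
    case "balanced": // free models for simple work, paid for everything else
      return task.complexity === "simple"
        ? freeWithQuota[0] ?? paid[0] ?? null
        : paid[0] ?? freeWithQuota[0] ?? null;
    case "quality-first": // prefer a paid model whenever one is capable
      return paid[0] ?? freeWithQuota[0] ?? null;
    default:
      throw new Error(`Unknown strategy: ${strategy}`);
  }
}

// Record the call against the free quota so later tasks see the updated budget.
function execute(task, strategy, table = models) {
  const model = routeTask(task, strategy, table);
  if (model && model.free) model.remainingQuota -= 1;
  return model ? model.id : null;
}

console.log(execute({ complexity: "simple" }, "free-first"));
console.log(execute({ complexity: "complex" }, "free-only"));
```

The design choice worth noting is that quota tracking and routing are separate steps: routing only reads the remaining quota, so the same table can back a cost dashboard without changing the routing rules.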

[COMMAND LINE INTERFACES]

Building command-line interfaces taught me that terminal users expect something fundamentally different than GUI users—efficiency and control without visual decoration or hand-holding, but also clarity when facing complex decisions. Drawing from 20 years watching professionals work, I knew Bumba's interactive dialogues needed to respect expertise while guiding users through genuinely complex operations: connecting MCP servers, managing API credentials across multiple providers, and building multi-agent workflows. Rather than forcing users into configuration files or cryptic flags, I designed three core dialogues following progressive disclosure principles—start with essential choices, reveal complexity only when needed, provide clear context at decision points, validate immediately, and always show a path forward. The MCP Manager lets users browse available Model Context Protocol servers, configure connections, test in real-time, and toggle servers without editing JSON. The API Manager guides credential management across OpenAI, Anthropic, Google, and OpenRouter, testing authentication immediately and securely storing keys. The Workflow Builder transforms automation design into step-by-step guidance where users define objectives, select agent teams, configure parameters, set up conditional logic, test before deployment, and save reusable templates. These aren't just terminal forms—they're conversations that make sophisticated capabilities accessible without requiring users to become Bumba experts, recognizing that the best tools disappear into workflow rather than demanding constant attention to their own complexity.

[MCP MANAGER]

Interactive manager that lets users enable and disable their MCP servers directly from the command line.

[API MANAGER]

Interactive manager that lets users enable and disable model APIs directly from the command line.

[WORKFLOW BUILDER]

Interactive dialog that lets users create workflows as an instruction layer that agents can later reference.

[BUMBA CHAT INTERFACE]

The `bumba chat` command became my attempt to create a conversational interface similar to Claude Code and Gemini CLI while respecting terminal constraints—no graphical distractions, no unnecessary chrome, just clean interaction that keeps users in flow. I quickly learned that terminal responsiveness is deceptively hard: unlike web interfaces where CSS handles layout automatically, terminal elements like divider lines and ASCII tables must be manually redrawn when users resize windows, requiring careful event handling I initially underestimated. An even bigger struggle was managing logging output—multi-agent systems generate enormous diagnostic information, and early chat versions drowned in noise, making the interface unusable. Through iteration, I reduced logging to essential minimums and discovered a solution that actually enhanced experience rather than just reducing problems: surfacing the active agent team as they're called for each task. Agents appear color-coded by department (green for Engineering, yellow for Strategy, blue for Design, orange for QA, red for Operations), giving users real-time transparency into which specialists handle their requests without overwhelming them with implementation details. This wasn't brilliance—it was necessity born from watching test users get confused about what Bumba was actually doing. The result feels professional and responsive while being uniquely Bumba, showing just enough orchestration to build trust while keeping focus on getting work done rather than admiring the machinery.
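Both ideas in this section, department color-coding and manual redraws on resize, can be shown in a short Node.js sketch. The helper names (`formatAgentBadge`, `divider`) and the badge layout are assumptions for illustration; the color mapping follows the text above, and orange is approximated with 256-color code 208 because basic ANSI has no orange.

```javascript
// ANSI escape codes per department, following the color mapping described above.
// Orange assumes a terminal with 256-color support.
const DEPARTMENT_COLORS = {
  Engineering: "\x1b[32m", // green
  Strategy: "\x1b[33m", // yellow
  Design: "\x1b[34m", // blue
  QA: "\x1b[38;5;208m", // orange
  Operations: "\x1b[31m", // red
};
const RESET = "\x1b[0m";

// Render an agent name with its department color; unknown departments stay uncolored.
function formatAgentBadge(agent) {
  const color = DEPARTMENT_COLORS[agent.department] ?? "";
  return `${color}● ${agent.name}${color ? RESET : ""}`;
}

// Divider line that spans the given width (defaults to the current terminal width).
function divider(columns = process.stdout.columns || 80) {
  return "─".repeat(columns);
}

// Terminals do not reflow drawn lines, so width-dependent elements are redrawn on resize.
process.stdout.on("resize", () => {
  console.log(divider());
});

console.log(formatAgentBadge({ name: "backend-developer", department: "Engineering" }));
console.log(divider(40));
```

A real chat view would redraw the whole frame in the resize handler instead of appending a new divider; the handler here only shows where that work would hook in.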

[CHAT DESIGN]

The view when Bumba Chat loads provides branding, an input section, and a status line intended to reflect token usage.

[MULTI AGENT]

When a text prompt is submitted, the task is routed to an appropriate department chief that spawns sub-agents.

[OVER-ENGINEERING AS RESEARCH]

Building Bumba was fundamentally a research project, and I understood that from the beginning even if the scale surprised me later. Over eight months working alongside Claude, I created more than 2,000 JavaScript files totaling nearly 180,000 lines of code across 150+ core modules—more code than many production applications, spanning agent orchestration, cost optimization, memory systems, Notion integration, Figma bridges, monitoring infrastructure, and dozens of other subsystems. This was a moonshot, and I poured everything into it: countless late nights debugging orchestration failures, redesigning architectures, having conversation after conversation with Claude while pushing toward a vision that felt simultaneously impossible and essential. I knew I was over-engineering. A solo design engineer with no formal software training had no business attempting to build a production-grade multi-agent framework rivaling tools from well-funded startups. But the pursuit of knowledge was the point—I wanted to understand multi-agent systems deeply enough to wrestle with hard problems that don't have obvious solutions. How do you prevent agent spawning from spiraling out of control? How do you route tasks intelligently across 40 specialists? How do you make sophisticated AI orchestration economically viable? Each module represented a question I needed to answer through building, not reading about. The framework forced me to think through hierarchical command structures mirroring real organizations, implementing six task distribution strategies, building cost intelligence maximizing free-tier usage, creating responsive terminal interfaces, managing logging without drowning users in noise, and architecting resource pooling that dynamically spawns and retires agents. I emerged with knowledge I couldn't have gained any other way—not from tutorials or documentation or conference talks. 
You only truly understand these systems by building them, breaking them, fixing them, iterating relentlessly.

As the codebase grew, reality became undeniable: completing this vision to production quality was impossible alone. The scope was too vast, technical debt accumulating, maintenance burden unsustainable. But rather than viewing this as failure, I recognized it as the natural conclusion of a successful research phase—I had achieved what I set out to do, gaining deep expertise in multi-agent orchestration. The question became: what do I do with this knowledge? Continuing to build a standalone framework made less sense than extracting the most valuable patterns and reimagining them for Claude Code, where they could benefit a broader community without requiring adoption of yet another CLI tool. This pivot wasn't abandoning Bumba; it was evolving the insights into something more sustainable, accessible, and ultimately more impactful—transforming eight months of solo research into reusable components for the broader Claude Code ecosystem.

[EXTRACTING PRIMITIVES & COMPONENTS]

The extraction process required honest assessment about what actually mattered in Bumba's massive codebase—separating genuine innovations worth preserving from over-engineered complexity I'd built while learning. I approached this systematically, auditing each of the 150+ core modules with a simple question: does this solve a fundamental problem in multi-agent orchestration that others will face, or did I just build it because I could? The goal was creating a library of reusable primitives—building blocks that could be repurposed for future multi-agent systems or integrated into Claude Code as native skills, plugins, and agents. These weren't code snippets to copy-paste; they were distilled patterns representing months of research, iteration, and hard-won understanding about what actually works in production-scale AI orchestration, lessons learned through building, breaking, and rebuilding until the patterns proved themselves. These primitives became the foundation for everything that followed. By isolating core innovations from Bumba's complexity, I created a toolkit that could be applied flexibly—whether building new multi-agent systems from scratch or extending Claude Code with sophisticated orchestration capabilities. Each component represented a solved problem, not theoretical architecture: cost optimization strategies that actually work after burning through API budgets, task distribution algorithms proven through real usage and failure, terminal interface patterns that respect user workflow because I watched users struggle with early versions, and orchestration hierarchies that mirror how teams actually function based on 20 years observing what works and what doesn't. This extraction wasn't just about code reuse; it was about capturing the architectural thinking behind Bumba in a form others could understand, adopt, and build upon without needing to spend eight months learning the same hard lessons. 
The primitives made knowledge transferable, transforming solo research into reusable solutions for the broader AI development community.

[SYSTEMS]

1. AI MODEL GATEWAY

2. UNIVERSAL TOOL BRIDGE

3. AGENT COORDINATION

4. AGENT LIFECYCLE

5. MEMORY MCP

6. SYSTEM OBSERVABILITY

7. COMMAND ROUTING

+MORE

[PRIMITIVES]

1. ADAPTIVE PLANNER

2. AGENT FACTORY

3. ERROR RECOVERY

4. FILE LOCKING

5. HEALTH MONITOR

6. TOKEN COST MANAGER

7. UNIFIED MEMORY

+MORE

ZED GRAPHICAL EDITOR

[A NOTE ABOUT THE ZED IDE]

After experimenting with popular AI-enabled IDEs like Cursor, VS Code, and Windsurf, I found myself gravitating toward Zed for reasons that traced back to how I learned to work with AI agents—through the terminal first, spending months in command-line interfaces before ever using a graphical editor. Zed felt like a natural middle ground between the terminal comfort I'd developed and the practical benefits of an IDE: lightweight, terminal-native in philosophy, and designed for developers who value speed over feature bloat. The Rust-based architecture delivering near-instant project loading, built-in collaboration that felt native rather than bolted-on, and a minimalist interface keeping focus on code rather than chrome—all of this resonated with how I actually wanted to work. I recognize this makes me somewhat unusual as a designer; many of my peers prefer feature-rich IDEs that provide more guidance and structure, which is completely valid. I just found I didn't need that handholding after months living in terminal environments, and Zed honored that preference without sacrificing the conveniences an IDE provides. But as someone who's spent 20 years obsessing over visual details, I couldn't settle for default themes. Working with Claude, we experimented with color schemes and syntax highlighting, iterating on contrast ratios, readability at different times of day, and visual fatigue during long sessions. We ultimately landed on a signature dark theme with a distinctive red cursor highlight—the Bumba theme for Zed. It's minimal, easy on the eyes during extended work, and reflects the same design sensibility that shaped every aspect of the Bumba CLI: intentional, refined, and built for serious use.

[A NOTE ABOUT THE ZED IDE]

The development process started by gathering example Zed theme JSON files for Claude to study, establishing the technical structure and configuration patterns theme creation required. I provided the Bumba color palette and brand design references, creating clear mapping between the established visual identity and the new IDE theme implementation. Through multiple iterations refining syntax highlighting, UI chrome colors, and cursor treatments, we converged on the final minimal dark theme that became the signature Bumba aesthetic for Zed.

FORTY THIEVES AGENT TEAM

[AGENT TEAM EXTRACTION]

One of the most significant extractions from Bumba was the agent team architecture—a system I call the Forty Thieves—which required reimagining the entire organizational structure for Claude Code's simpler, more elegant approach. The original Bumba framework modeled agents after professional product studios I'd worked in: five departments (Engineering, Design, Product Strategy, QA & Testing, Operations), each with a chief overseeing seven specialists, creating a 40-agent ecosystem mirroring how real teams collaborate and divide work. Building these for Claude Code required fundamentally rethinking my approach—in Bumba, complex JavaScript orchestration managed agent spawning, task routing, resource allocation, and state coordination, but Claude Code's native agent system eliminated that entire infrastructure layer, letting agents be pure markdown files Claude interprets directly. The challenge shifted from managing execution to encoding expertise effectively. Working with Claude through countless iterations, we developed a consistent markdown structure for all 40 agents, each following the same architectural pattern: YAML frontmatter defining identity (name, description, department color), followed by sections establishing core expertise, Claude Code integration guidance, methodology frameworks, output templates, language-specific implementations, situational boundaries, and professional philosophy. The methodology frameworks represent the real knowledge transfer—20 years of product development experience made explicit and accessible, with the Backend Architect encoding SOLID principles and implementations across seven programming languages, the UX Researcher bringing interview protocols and usability testing frameworks, the DevOps Engineer providing CI/CD patterns and monitoring strategies. 
This extraction transformed what took eight months to build in Bumba into something anyone using Claude Code can invoke without installing frameworks or managing configurations—the agents simply exist as markdown files in `.claude/agents/`, ready to provide specialized expertise across the full spectrum of product development while preserving the hierarchical intelligence without the implementation complexity.

[BUILDING AGENTS]

Through experimentation, I learned that each of the 40 agent markdown files needed a repeatable structure to work effectively: YAML frontmatter establishing identity (name, description, department color), followed by sections encoding core expertise, methodology frameworks with actionable checklists, output templates, language-specific implementations, and situational boundaries.
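As a rough illustration of that repeatable structure, the JavaScript sketch below embeds a made-up agent file and checks it. The file contents, the section titles, the `color` field name, and the helpers `parseAgentFile` and `missingFields` are all hypothetical; the parser handles only flat `key: value` frontmatter and is not a full YAML parser.

```javascript
// A made-up agent file following the structure described above.
// Field values and section titles are illustrative, not copied from the real agents.
const exampleAgentFile = `---
name: backend-architect
description: Designs service boundaries and reviews API contracts.
color: green
---

## Core Expertise
Service design, data modeling, API contracts.

## Methodology
- Checklist item one
- Checklist item two

## Output Templates
Architecture decision record.

## Boundaries
Defers visual design questions to the Design department.
`;

// Split a file into flat frontmatter fields and a list of second-level section titles.
function parseAgentFile(text) {
  const match = text.match(/^---\n([\s\S]*?)\n---\n?([\s\S]*)$/);
  if (!match) throw new Error("Missing YAML frontmatter");
  const frontmatter = {};
  for (const line of match[1].split("\n")) {
    const idx = line.indexOf(":");
    if (idx === -1) continue; // skip lines that are not key: value pairs
    frontmatter[line.slice(0, idx).trim()] = line.slice(idx + 1).trim();
  }
  const sections = [...match[2].matchAll(/^## (.+)$/gm)].map((m) => m[1].trim());
  return { frontmatter, sections };
}

// Identity fields named in the text: name, description, department color.
const REQUIRED_FIELDS = ["name", "description", "color"];

function missingFields(agent) {
  return REQUIRED_FIELDS.filter((field) => !agent.frontmatter[field]);
}

const parsed = parseAgentFile(exampleAgentFile);
console.log(parsed.frontmatter.name, parsed.sections, missingFields(parsed));
```

A check like this could run across all 40 files to catch an agent that drifted from the shared template.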

PROJECT-INIT & GITHUB-TO-NOTION

[PROJECT INITIALIZATION]

The project-init feature provides a streamlined onboarding flow for setting up GitHub-backed projects with integrated Notion dashboards. When invoked, it leverages the global `/project-init` command architecture, detecting the BUMBA-Notion plugin through hooks and automatically scaffolding a complete project workspace. The system creates a hierarchical structure in Notion (Projects → Epics → Sprints → Tasks) with bidirectional GitHub synchronization, storing project metadata locally in `~/.claude/plugins/bumba-notion/state/` and optionally in bumba-memory MCP for cross-session persistence. This one-time setup establishes the foundation for continuous GitHub issue tracking, milestone management, and dependency-aware task orchestration without requiring manual Notion database configuration.

[PROJECT-INIT WALKTHROUGH]

[GITHUB-TO-NOTION SYNC WITH DEPENDENCY TRACKING]

The `/gh/sync-notion` command implements intelligent two-pass synchronization that transforms GitHub issues into structured Notion tasks while preserving dependency relationships. The system parses issue body text for dependency syntax ("Depends on #N", "Blocked by #N", "Requires #N"), creates all tasks in a first pass, then links dependencies in a second pass using self-referencing Notion relations. This enables a formula-driven "Ready Queue" that automatically surfaces only unblocked work—tasks with no dependencies or all dependencies completed—preventing premature assignment of blocked tasks. The sync includes pre-execution status display (last sync time, freshness indicators), post-execution summaries (created/skipped/error counts), retry logic for API rate limits, and complete sync history tracking, creating a production-ready workflow bridge between GitHub's issue tracking and Notion's project management capabilities.
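The two-pass approach and the Ready Queue rule can be sketched in plain JavaScript. This is a simplified model, not the command's implementation: an in-memory `Map` stands in for the Notion database, no GitHub or Notion API is called, and the names `parseDependencies`, `syncIssues`, and `readyQueue` are assumptions. The three dependency phrasings are the ones listed above.

```javascript
// Matches the three dependency phrasings named above, e.g. "Depends on #12".
const DEPENDENCY_PATTERN = /\b(?:depends on|blocked by|requires)\s+#(\d+)/gi;

// Extract referenced issue numbers from an issue body.
function parseDependencies(body) {
  return [...body.matchAll(DEPENDENCY_PATTERN)].map((m) => Number(m[1]));
}

// Two-pass sync into a Map that stands in for the Notion task database.
function syncIssues(issues) {
  const tasks = new Map();
  // Pass 1: create every task first so that all link targets exist.
  for (const issue of issues) {
    tasks.set(issue.number, {
      number: issue.number,
      title: issue.title,
      done: issue.state === "closed",
      dependsOn: [],
    });
  }
  // Pass 2: link dependencies, skipping self-references and issues that were not synced.
  for (const issue of issues) {
    const task = tasks.get(issue.number);
    task.dependsOn = parseDependencies(issue.body ?? "").filter(
      (n) => tasks.has(n) && n !== issue.number
    );
  }
  return tasks;
}

// Ready queue: open tasks with no dependencies, or with every dependency done.
function readyQueue(tasks) {
  return [...tasks.values()].filter(
    (t) => !t.done && t.dependsOn.every((n) => tasks.get(n).done)
  );
}

// Sample issues invented for this example.
const issues = [
  { number: 1, title: "Design schema", state: "closed", body: "" },
  { number: 2, title: "Build API", state: "open", body: "Depends on #1" },
  { number: 3, title: "Build UI", state: "open", body: "Blocked by #2 and requires #1" },
  { number: 4, title: "Write docs", state: "open", body: null },
];

const synced = syncIssues(issues);
const ready = readyQueue(synced).map((t) => t.number);
console.log(ready);
```

Creating all tasks before linking is what makes forward references safe: issue #2 can depend on #7 even though #7 is processed later in the same run.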

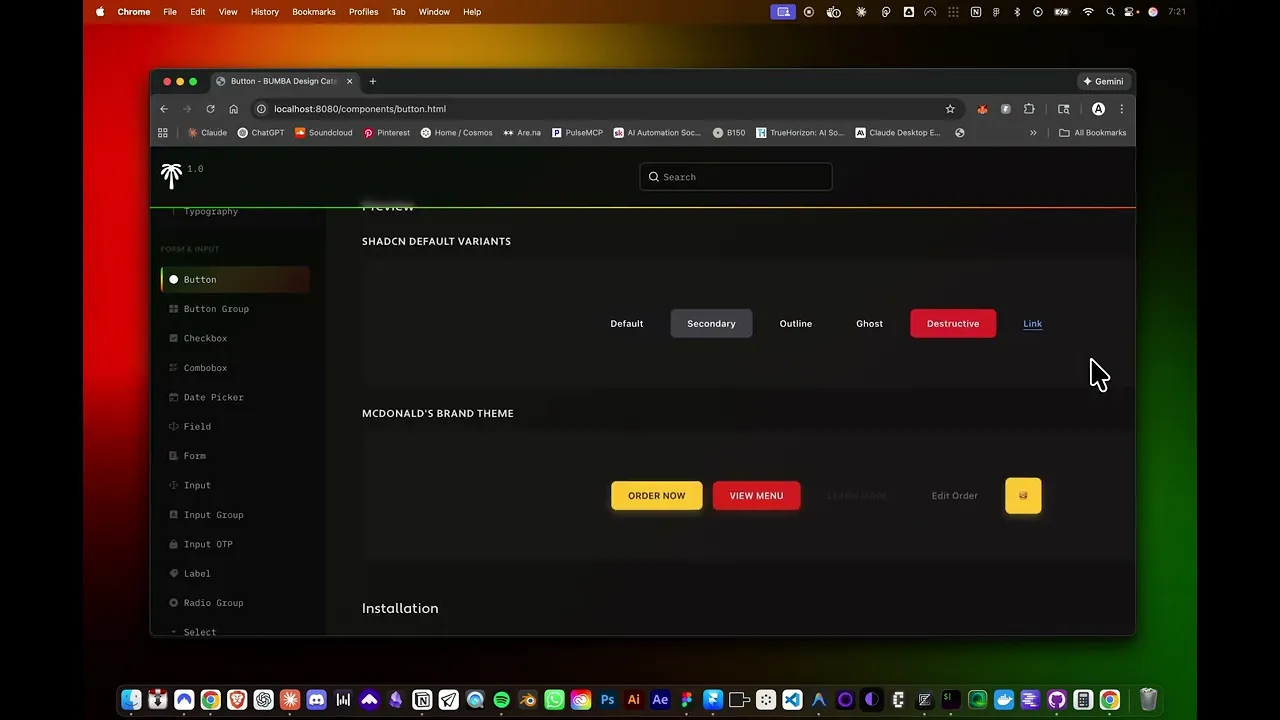

DESIGN-INIT COMMAND

[DESIGN ORGANIZATION]

One of the most powerful extractions from Bumba became the `design-init` command—a sophisticated initialization system I built after watching developers struggle with the tedious setup required for design-to-code workflows. Rather than forcing developers to manually create directories, configure build tools, or wire up complex toolchains, design-init implements an intelligent two-phase architecture: an interactive configuration phase where Claude gathers project context (framework choice, TypeScript usage, output paths, Storybook preferences, layout extraction settings), followed by an automated execution phase handled entirely by hooks. The command detects existing project patterns—identifying Next.js configurations, TypeScript setups, or prior design structures—and adapts its recommendations accordingly, creating a personalized onboarding experience that respects what already exists. Upon completion, design-init generates a complete `.design/` directory hierarchy containing token registries, component definitions, extracted code paths organized by framework, a BUMBA Design Catalog for visual exploration, optional Storybook configuration with custom theming, and comprehensive documentation. This isn't just scaffolding; it's the operational infrastructure I wish I'd had when starting Bumba, enabling bidirectional design-code synchronization across 10 frameworks (React, Vue, Angular, Svelte, Flutter, SwiftUI, Jetpack Compose, React Native, Next.js, Web Components), letting teams extract design tokens from Figma, transform them into production-ready code, and maintain cascade sync that preserves manual modifications even as designs evolve. The command emerged from months of wrestling with real-world design system challenges, distilled into a single invocation that delivers what used to require hours of manual configuration—making sophisticated design-to-code workflows accessible to any development team using Claude Code.

[DESIGN INIT WALKTHROUGH]

[SETUP DIALOG]

Initially, the /design-init command gathers information from the user in order to set up the working directory for design work.

[SPECIFY LANGUAGES]

A large portion of the /design-init setup is dedicated to choosing the target languages and frameworks for design-to-code output.

[ADD FEATURES]

The system also sets up supporting assets such as auto sync, Storybook, and the design catalog.

DESIGN DIRECTOR WORKFLOW

[BUILDING DESIGN SPECIFICATIONS]

While building the BUMBA Design ecosystem, I discovered Design OS, Brian Casel's brilliant product planning framework that bridges the gap between product vision and codebase implementation. His system provides a React-based application where teams work through structured planning phases: defining product vision, modeling data entities, choosing design tokens, and creating screen designs before exporting a complete handoff package for developers. I was impressed by how Design OS formalized the messy early planning work that often gets skipped, but I wanted something more aligned with the CLI-first, Claude Code-native philosophy I'd been building with BUMBA. So I adapted Brian's approach into Design Director, a command-line questionnaire system that guides users through similarly rigorous planning without requiring a separate React application. Where Design OS presents an interactive web interface, Design Director implements the workflow as a conversational CLI experience: terminal interactions that feel natural when working alongside Claude, asking targeted questions, generating markdown specifications, creating TypeScript type definitions, and building exportable packages. The key difference is that Design Director doesn't generate unique visual design specifications. Instead, it references the BUMBA Design Registry containing design tokens and components already extracted from Figma, allowing specifications to point to existing design system assets rather than defining new visual designs from scratch. This integration with the BUMBA Design System infrastructure means Design Director reads extracted design tokens from `.design/tokens/`, references available components from `.design/components/`, and understands framework preferences from `.design/config.json` to generate specifications that align with actual design system assets already established in the project. 
BUMBA Design creates tangible assets (tokens, components, layouts), while Design Director creates specifications (vision documents, data models, user flows, implementation instructions); they are complementary tools working together rather than competing approaches. The questionnaire can be completed in a single 30-60 minute session via the `/design-director-run` command, or tackled incrementally using individual commands for each phase. Either way, it exports a comprehensive implementation package to `.design/bumba-design-director/design-direction-plan/` containing ready-to-use prompts for coding agents, milestone-by-milestone implementation instructions, TypeScript type definitions auto-generated from data models, sample data for testing, and direct references to BUMBA Design assets. The workflow systematically guides you through the following steps:

[PROCESS STEPS]

1. PRODUCT VISION

2. PRODUCT ROADMAP

3. DATA MODEL

4. SHELL SPECIFICATION

5. SECTION SPECIFICATION

6. SAMPLE DATA GENERATION

7. SCREEN SPECIFICATION

8. EXPORT PACKAGE

[CLI IMMEDIACY]

As mentioned earlier in this case study, this feature is an adaptation of Brian Casel's Design OS. Moving to a CLI experience removes some of the friction of running a separate app. I also adapted the system to take extracted design system assets into account when they exist in the project directory.

[DESIGN DIRECTOR CONTD]

Design Director generates design-focused specifications that work alongside traditional engineering specifications like PRDs (Product Requirements Documents). What I learned building this was that product development actually needs two complementary specification sets: engineering-focused specs covering backend architecture, APIs, and data flow (typically derived from PRDs), and design-focused specs covering user flows, interface requirements, and visual implementation (what Design Director generates). The outputs include markdown specifications capturing product vision and section requirements, auto-generated TypeScript type definitions from data models, JSON sample data files, and a complete export package containing ready-to-use prompts for coding agents alongside milestone-by-milestone implementation instructions. When BUMBA Design System assets are available (extracted tokens and components), Design Director seamlessly references them throughout specifications; when unavailable, it gracefully provides placeholders and guidance for future extraction, accommodating workflows at any stage of design maturity from early concepting to complete design system implementation. The key insight is that Design Director captures *what* you're building from a design perspective (features, data structures, user flows, interface compositions) while assuming you've already determined *why* through market research, competitive analysis, and business case development—likely documented in traditional PRDs and engineering specs. In practice, you'd use both specification types together: engineering specs guide backend implementation and API design, design specs guide frontend implementation and user experience, and where they meet—data contracts, API responses, state management—is where you as the developer reconcile the two sets of specifications to ensure the full system works coherently. 
This dual-specification approach reflects how real product teams actually work, with product managers defining business requirements, engineers architecting technical solutions, and designers specifying user experiences, all contributing complementary documentation that coding agents can execute against with confidence.
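
As a rough illustration of the "auto-generated TypeScript type definitions" output, here is a minimal Python sketch. The data-model shape (an entity name mapped to field/type pairs, with a trailing `?` for optional fields) and the function name `render_interface` are hypothetical and are not Design Director's actual format.

```python
# Hypothetical mapping from simple data-model type tags to TypeScript types.
TS_TYPES = {"string": "string", "number": "number", "boolean": "boolean", "date": "string"}


def render_interface(entity: str, fields: dict) -> str:
    """Render one data-model entity as a TypeScript interface declaration."""
    lines = [f"export interface {entity} {{"]
    for name, tag in fields.items():
        optional = tag.endswith("?")  # assumed convention: "date?" means optional
        ts_type = TS_TYPES.get(tag.rstrip("?"), "unknown")
        lines.append(f"  {name}{'?' if optional else ''}: {ts_type};")
    lines.append("}")
    return "\n".join(lines)


invoice_ts = render_interface(
    "Invoice", {"id": "string", "total": "number", "paidAt": "date?"}
)
print(invoice_ts)
```

Keeping the generator a pure function of the data model means the same model can also drive sample-data generation, so the types and the JSON fixtures cannot drift apart.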

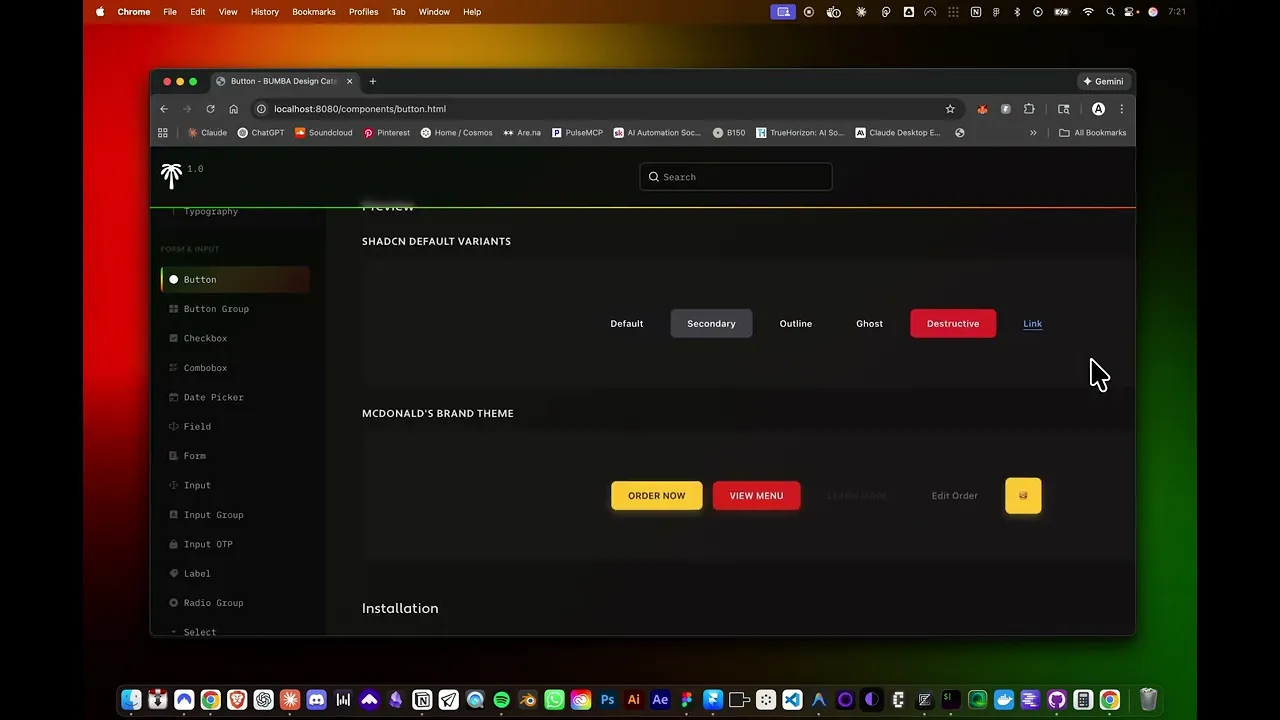

BUMBA STORYBOOK THEME

[DESIGN SYSTEM AUTOMATION]

Creating a cohesive design system taught me that visual consistency matters across every touchpoint where developers and designers interact with design assets, not just in the final product. The BUMBA Storybook theme emerged from recognizing that default Storybook, while functional, felt visually disconnected from the BUMBA Design Catalog I'd built. I wanted unified brand experience across design documentation tools, so working with Claude, we crafted a complete theming solution capturing BUMBA's visual identity: warm olive-tinted dark backgrounds (`#13120F`, `#1E1C19`) instead of sterile greys, the signature six-color gradient (green → yellow-green → yellow → orange-yellow → orange-red → red) used strategically throughout the interface, and typography powered by Adobe Fonts featuring Freight Text for headings, Freight Sans for body copy, and SF Mono for code—the same fonts defining the Design Catalog experience. The theme architecture splits across three coordinated files: `theme.js` defining core color palettes and typography systems, `bumba-manager.css` styling the Storybook manager UI (sidebar navigation, toolbar, component tree), and `bumba-preview.css` controlling the preview canvas where components render, with all three reading from shared CSS custom properties ensuring changes propagate consistently. This wasn't superficial branding—it was about architectural coherence, making sure that whether developers browse components in Storybook or explore design tokens in the HTML catalog, they experience the same visual language, reinforcing BUMBA's identity as a complete ecosystem rather than disconnected tools.

[COMPONENT DETAIL]

Storybook adds clear value for cross-disciplinary collaboration between design and development by providing a centralized resource for asset management.

[STORYBOOK AUTOMATION]

The Storybook integration represents the final stage in BUMBA Design's extraction-to-documentation pipeline, a system I built iteratively as I discovered what actually needed to happen for design assets to flow from diverse sources through standardized transformation before appearing in branded Storybook documentation. The pipeline begins with multi-source extraction: the Figma Plugin captures tokens and components directly from design files, the Figma MCP enables Claude to extract assets through conversational commands, the ShadCN MCP imports pre-built component libraries, manual JSON files provide custom token definitions, and CLI installations bring in design systems from package registries—all converging into `.design/tokens/` as source-agnostic JSON. From there, the Registry Manager (V4.0.0) orchestrates the workflow, assigning canonical IDs for O(1) lookups, tracking dependencies through a graph structure, mapping source identifiers (Figma node IDs, ShadCN component names) to internal references, and maintaining the component registry at `.design/components/registry.json` serving as single source of truth. When transformation occurs via commands like `/design-transform-react`, framework-specific optimizers process tokens into production code (CSS-in-JS, Tailwind classes, styled-components), generate component files with proper imports and prop types, and critically—invoke the Story Generator to create Storybook stories automatically. The Story Generator analyzes component props to auto-generate interactive controls, creates variant stories for different prop combinations, includes Figma URLs linking back to source designs, and writes CSF3-format stories to `.design/extracted-code/{framework}/stories/`. 
These stories get discovered by Storybook through its auto-configuration, rendered within the custom BUMBA theme, and presented to developers as polished, branded component documentation—completing the journey from design file to interactive documentation without manual intervention while maintaining visual consistency with the broader BUMBA ecosystem.
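
The Registry Manager's three responsibilities (canonical IDs, source-identifier mapping, and a dependency graph) can be sketched with plain dictionaries, which give average-case O(1) lookups. This is an illustrative toy, not the actual Registry Manager; the class name, the `kind:name` ID scheme, and the example source IDs are all assumptions.

```python
from collections import defaultdict
from typing import Optional


class Registry:
    """Toy registry: canonical IDs, source-ID mapping, and a dependency graph."""

    def __init__(self) -> None:
        self.items = {}                # canonical ID -> record
        self.by_source = {}            # (source, source ID) -> canonical ID
        self.deps = defaultdict(set)   # canonical ID -> IDs it depends on

    def register(self, kind: str, name: str, source: str, source_id: str) -> str:
        canonical_id = f"{kind}:{name}"  # assumed ID scheme, for illustration only
        self.items[canonical_id] = {"kind": kind, "name": name, "source": source}
        self.by_source[(source, source_id)] = canonical_id
        return canonical_id

    def add_dependency(self, item_id: str, depends_on: str) -> None:
        self.deps[item_id].add(depends_on)

    def resolve(self, source: str, source_id: str) -> Optional[str]:
        """Map a source identifier (e.g. a Figma node ID) to its canonical ID."""
        return self.by_source.get((source, source_id))


design_registry = Registry()
color_id = design_registry.register("token", "color-primary", "figma", "S:abc123")
button_id = design_registry.register("component", "button", "figma", "12:34")
design_registry.add_dependency(button_id, color_id)
```

With this shape, a transformer that receives a Figma node ID can resolve it to a canonical ID and then read the component's token dependencies without scanning the whole registry.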

BUMBA / FIGMA DESIGN PLUGIN

[FIGMA DESIGN PLUGIN]

The BUMBA Figma Plugin became the critical first mile in the design-to-code pipeline I was building, serving as the extraction mechanism that captures design decisions directly from Figma files and transforms them into machine-readable formats the BUMBA ecosystem could process. It's important to note: building this plugin was never intended to compete with Figma's native code extraction features, Anima, or other established code generation tools. Rather, it's a purpose-built solution tailored specifically to integrate with BUMBA's larger ecosystem and Claude Code workflows. I have tremendous respect and admiration for Figma, the Figma team, and what they've accomplished with both the Figma platform and the Figma MCP server. In fact, the workflows I've developed leverage multiple extraction methods—the BUMBA plugin for specific design token and component extraction, the Figma MCP server for conversational design manipulation, and various other approaches—demonstrating that these tools complement rather than replace each other within a sophisticated design-to-code pipeline. Building this through iterative collaboration with Claude taught me how Figma's plugin architecture actually works—the message-based communication pattern where `code.js` runs in Figma's sandboxed backend with full access to the Plugin API, while `ui.html` provides a 1,894-line self-contained interface featuring inline CSS and JavaScript, tab-based navigation (Connect, Extract, Catalog, Analyze), and modal systems guiding users through complex extraction workflows. Early development confronted a frustrating technical challenge: Figma's aggressive file caching made iteration painfully slow, requiring manual plugin removal and re-import after every code change, sometimes waiting minutes just to test a single-line fix. 
This pushed me to build a custom build system solving the caching problem through automatic versioning—injecting timestamps into HTML comments and updating manifest versions to force Figma to recognize changes, while maintaining source-to-dist separation keeping editable files in `src/` and auto-generated builds in `dist/`. The plugin requests network permissions for localhost (ports 9001 HTTP, 9002 WebSocket) and production BUMBA domains, enabling bidirectional communication with the BUMBA Design Server: sending extracted tokens and components via HTTP POST, receiving transformation confirmations, and optionally maintaining WebSocket connections for real-time synchronization when design files change. This isn't a simple exporter—it's an intelligent bridge that understands Figma's API deeply enough to extract paint styles, text styles, effect styles, auto-layout spacing, border radius values, and complete component hierarchies, packaging everything with standardized source metadata enabling traceability, timestamp-based conflict resolution, and direct Figma URL links back to original design nodes.
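
The cache-busting step described above (stamping the UI file and bumping the manifest on every build) might look roughly like the following sketch. The comment format, the function names, and the use of a `version` key in the manifest are assumptions drawn from the description, not the actual build script; check Figma's manifest documentation for the fields it supports.

```python
import re
from datetime import datetime, timezone

# Matches a previously injected build comment so rebuilds do not stack stamps.
STAMP = re.compile(r"<!-- build: .*? -->\n?")


def stamp_html(html: str, now: datetime) -> str:
    """Replace (or add) a build-timestamp comment at the top of the UI file."""
    html = STAMP.sub("", html)
    return f"<!-- build: {now.isoformat()} -->\n{html}"


def bump_manifest(manifest: dict, now: datetime) -> dict:
    """Return a copy of the manifest with a timestamp-derived version string."""
    return {**manifest, "version": now.strftime("%Y%m%d.%H%M%S")}


build_time = datetime(2025, 1, 2, 3, 4, 5, tzinfo=timezone.utc)
stamped = stamp_html("<html></html>", build_time)
manifest = bump_manifest({"name": "BUMBA", "main": "code.js", "ui": "ui.html"}, build_time)
```

A real build would read from `src/`, apply these two functions, and write the results to `dist/`, which keeps the hand-edited sources free of generated stamps.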

The plugin's sophistication extends beyond basic extraction to implement a comprehensive design token taxonomy and component analysis system that I developed by studying how design systems actually work in practice. It doesn't just grab colors—it understands the semantic structure of Figma's local styles, distinguishing between paint styles (solid colors, gradients), text styles (typography systems with font families, weights, sizes, line heights, letter spacing), effect styles (drop shadows, inner shadows, blurs), and inferred patterns like spacing values extracted from auto-layout frames and border radius values gathered from component instances. Each extracted token carries complete provenance through source metadata objects recording the extraction type (`figma-plugin`), file key for generating Figma URLs, node IDs for specific element references, style IDs for referencing Figma's internal style system, and ISO-formatted extraction timestamps enabling the Registry Manager to resolve conflicts when multiple sources provide competing definitions for the same design decision. The component extraction goes deeper still, implementing a `StyleCache` class providing O(1) lookups for style dependencies, enabling the plugin to analyze which tokens a component actually uses rather than simply cataloging what exists—if a button component references a specific text style and fill color, those dependencies are captured explicitly, letting the transformation system downstream generate imports and references accurately. The architecture anticipates growth through its modular message handler routing UI requests (`extract-tokens`, `start-single-component-selection`, `extract-selected-component`, `connect-to-server`, `toggle-auto-sync`) to specialized functions, making it straightforward to add new extraction capabilities, support additional Figma node types, or implement more sophisticated analysis algorithms as the BUMBA ecosystem evolves.
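
To make the provenance idea concrete, here is a minimal sketch of timestamp-based conflict resolution between two definitions of the same token. Only the `figma-plugin` extraction type comes from the description above; the other field names (`fileKey`, `nodeId`, `extractedAt`), the `manual-json` label, and the "newest wins" rule are assumptions for illustration.

```python
from datetime import datetime


def parse_ts(value: str) -> datetime:
    # Older Python versions reject a trailing "Z" in fromisoformat, so normalise it.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def resolve_conflict(candidates: list) -> dict:
    """Pick the candidate whose source metadata carries the newest timestamp."""
    return max(candidates, key=lambda token: parse_ts(token["source"]["extractedAt"]))


competing = [
    {
        "name": "color-primary",
        "value": "#3b82f6",
        "source": {
            "type": "figma-plugin",
            "fileKey": "FILE_KEY",  # placeholder, not a real file key
            "nodeId": "12:34",
            "extractedAt": "2025-06-01T10:00:00Z",
        },
    },
    {
        "name": "color-primary",
        "value": "#2563eb",
        "source": {"type": "manual-json", "extractedAt": "2025-06-03T09:30:00Z"},
    },
]
winner = resolve_conflict(competing)
```

A production resolver would likely also weigh source priority (for example, preferring the design file over a manual override, or the reverse), which a pure timestamp comparison cannot express.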

[FIGMA PLUGIN WALKTHROUGH]

[CODE EXTRACTION]

When a designer triggers extraction, the plugin transforms Figma's proprietary design data into BUMBA's standardized JSON format through a sequence I refined over many iterations. The UI sends an `extract-tokens` message triggering the `TokenExtractor` class to traverse Figma's local style collections and page nodes. Color extraction calls `figma.getLocalPaintStylesAsync()`, filters for solid types, converts RGBA to hex, and packages each with style ID, kebab-case name, and source metadata. Typography extraction captures font family, weight, size, line height, letter spacing, and text case. Spacing extraction proved trickier—since Figma doesn't maintain explicit spacing tokens, I search for auto-layout frames, collect padding and gap values, eliminate duplicates, sort numerically, and generate semantic names (`xs`, `sm`, `md`, `lg`, `xl`) based on position. Effects extraction captures drop shadows and inner shadows with offset, blur, spread, and color values. Border radius extraction scans components and frames for `cornerRadius` properties. The complete payload—colors, typography, spacing, effects, border radius, component metadata, plus document-level metadata—gets serialized to JSON and either sent to the BUMBA server via HTTP POST or downloaded as a file, entering the Registry Manager's ingestion pipeline to receive canonical IDs, get indexed for O(1) lookups, have dependencies tracked, and become available for transformation commands generating framework-specific code and Storybook stories.
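
The pure-data parts of that sequence (color conversion, kebab-case naming, and positional spacing names) are easy to sketch outside Figma. The Python below is an illustration only: the real plugin runs as JavaScript inside Figma, the function names are hypothetical, alpha is ignored, and the `2xl`, `3xl` overflow naming for more than five values is an assumption.

```python
import re

SIZE_NAMES = ["xs", "sm", "md", "lg", "xl"]


def rgb_to_hex(r: float, g: float, b: float) -> str:
    """Figma reports color channels as floats in 0..1; convert to #rrggbb."""
    return "#" + "".join(f"{round(c * 255):02x}" for c in (r, g, b))


def kebab(name: str) -> str:
    """Turn a style name like 'Brand/Primary Blue' into 'brand-primary-blue'."""
    return re.sub(r"[^a-z0-9]+", "-", name.lower()).strip("-")


def name_spacing(values: list) -> dict:
    """Dedupe, sort numerically, and name spacing values by position."""
    unique = sorted(set(values))
    named = {}
    for i, value in enumerate(unique):
        if i < len(SIZE_NAMES):
            named[SIZE_NAMES[i]] = value
        else:
            named[f"{i - len(SIZE_NAMES) + 2}xl"] = value  # assumed overflow names
    return named


spacing = name_spacing([16, 8, 8, 4, 24])
```

Naming by position keeps the scale readable but means that adding a new smallest value renames every token above it, so a stable scale is worth pinning once the design system settles.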

[FIGMA CODE EXTRACTION DEMO]

[SKETCH FEATURE]

Beyond static extraction, I integrated conversational design capability through the Claude-Talk-To-Figma MCP server—adapting Sonny Lazuardi's cursor-talk-to-figma-mcp for Claude Desktop to enable real-time Figma manipulation through natural language. This establishes a four-layer architecture: Claude Desktop communicates with an MCP server translating design intentions into Figma Plugin API commands, flowing through a WebSocket server (port 3055) to a lightweight Figma plugin executing commands directly within the document. The WebSocket layer maintains persistent bidirectional connections enabling real-time progress updates—when Claude initiates "create a dashboard with sidebar navigation, header, and card-based metrics," the MCP server breaks this into atomic Figma commands, the WebSocket routes each to the correct channel (supporting multiple concurrent Claude sessions), and the plugin executes sequentially while streaming progress updates. Designers describe what they want—"redesign this button with hover states and better contrast," "create a mobile login screen," "apply our brand colors"—and Claude, equipped with 50+ MCP tools spanning document interaction, element creation, modification operations, and text manipulation, translates intentions into precise Figma API calls executing instantly. The integration doesn't replace extraction—it complements it, enabling a workflow where designers iterate conversationally with Claude, then trigger extraction to capture finalized tokens and components into the BUMBA registry, merging AI-assisted design creation with systematic design-to-code transformation.

[PLUGIN SKETCH FEATURE]

BUMBA DESIGN CATALOG

[STORYBOOK ALTERNATIVE]

While Storybook excels at interactive documentation, I built the BUMBA Design Catalog as a visually refined alternative focused on aesthetic presentation—think art gallery versus workshop. Generated via `npm run catalog` and served at `.design/catalog/index.html`, the catalog embraces BUMBA's signature visual identity more thoroughly than even the custom Storybook theme: immersive dark interface dominated by the six-color gradient, warm olive backgrounds, and Adobe Fonts typography (Freight Text, Freight Sans, SF Mono). Where Storybook prioritizes interaction through controls and state testing, the Design Catalog prioritizes visual discovery—presenting tokens as elegant swatches, typography specimens at actual sizes, spacing scales as graduated bars, shadow effects on sample cards, and components in their natural state without control panels that make Storybook feel like a development environment. Navigation flows through dedicated pages for each concern (colors, typography, spacing, shadows, forms, data display, feedback, navigation, overlays) plus auto-generated component pages routing based on pattern detection—the `ComponentPageRouter` analyzes structure and names to organize intuitively without manual categorization. This makes the catalog ideal for passive consumption: stakeholder presentations wanting visual polish over technical controls, design reviews where aesthetic harmony matters more than prop permutations, onboarding sessions where newcomers absorb visual patterns, and simply leaving open as ambient reference—a living mood board reinforcing brand identity. The catalog treats the design system itself as content worthy of beautiful presentation, using the same design tokens it displays to style its own interface, creating recursive demonstration where the design system documents itself through its own visual language. 
Storybook answers "how does this component behave?", the Design Catalog answers "how does our design system feel?"—sometimes that affective, vibes-first presentation communicates design intent more effectively than interactive controls.

[DASHBOARD]

The Design Catalog dashboard index view lets users review design system status at a glance and access instructions for getting started quickly.

[DESIGN CATALOG WALKTHROUGH]

[TOKENS AND COMPONENTS]

As you would expect, the Design Catalog provides dedicated pages for most component and token types. It also lets the user create new pages.

[DESIGN LAYOUTS]

Design Layouts are treated as a distinct design asset type throughout the BUMBA Design System, as they are normally composed of atomic design assets.

DESIGN LAYOUT TRANSFORMATION

[PROGRESSIVE CONTEXT]

Beyond extracting tokens and isolated components, I built a layout-to-code pipeline transforming complete Figma screens into production-ready framework code through five stages, each adding precision through progressive context layering. The journey starts with Figma plugin extraction generating `layout.json` (structured data capturing component references, flex properties, spacing, alignment, hierarchy) and `screenshot.png` (visual ground truth showing designer intent), establishing dual-format context providing both machine-readable structure and human-verifiable appearance. Stage two validates screenshot presence, stage three generates `reference.html` translating layout JSON into browser-renderable HTML with embedded Figma screenshot for side-by-side comparison, and stage four executes the critical three-pass visual validation loop where Claude, equipped with Chrome DevTools MCP integration, iteratively refines HTML to achieve pixel-perfect parity. (Note: This feature was originally built leveraging the Chrome MCP server for browser automation. With Claude Code's late 2025 release of native browser capabilities, I'm considering transitioning to Claude's built-in browser features, which could simplify the architecture while maintaining the same progressive validation approach.) Pass 1 loads `reference.html` in Chrome, captures `pass1-browser.png`, compares against `screenshot.png`, records discrepancies (gap should be 24px but renders 16px, alignment off by 8px), then applies fixes to inline styles—reloads—captures `pass2-browser.png`—compares again—applies refined adjustments—reloads—captures `pass3-browser.png`—generates `validation-report.json` documenting refinement trajectory with parity climbing from 85% to 95% to 98%. Each pass layers visual context (browser screenshots) on structural context (layout JSON) and design intent (Figma screenshot), creating progressively richer understanding guiding Claude toward precise HTML matching design exactly. 
Stage five leverages validated HTML as proven reference to generate final framework code by reading confirmed-accurate CSS, resolving component references through Registry Manager lookups, importing existing components with correct paths, applying validated spatial structure as framework-specific layout code (flexbox properties become className attributes, gap values become Tailwind utilities), and saving to `.design/extracted-code/{framework}/layouts/`. This progressive layering means Claude builds understanding incrementally—structure → visual intermediate → validated intermediate → framework output—with each layer providing feedback informing the next, resulting in layouts that visually match pixel-for-pixel rather than just structurally approximate.
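
The control flow of the three-pass loop (measure, record, fix, repeat, then report) can be sketched independently of any browser tooling. In this hypothetical version the screenshot comparison and the HTML fixes are injected as callables; `run_validation`, `fake_measure`, and `fake_fix` are invented names, and the stand-ins below replace the real Chrome-driven steps.

```python
from typing import Callable, List, Tuple


def run_validation(
    html: str,
    measure: Callable[[str], Tuple[float, List[str]]],
    fix: Callable[[str, List[str]], str],
    passes: int = 3,
) -> dict:
    """Measure parity, apply fixes between passes, and record the trajectory."""
    report = {"passes": []}
    for n in range(1, passes + 1):
        parity, issues = measure(html)
        report["passes"].append({"pass": n, "parity": parity, "issues": issues})
        if n < passes and issues:  # the final pass only measures
            html = fix(html, issues)
    report["final_html"] = html
    return report


# Stand-ins: the "browser" reports a gap mismatch until the inline style is fixed.
def fake_measure(html: str) -> Tuple[float, List[str]]:
    if "gap:24px" in html:
        return 0.98, []
    return 0.85, ["gap should be 24px but renders 16px"]


def fake_fix(html: str, issues: List[str]) -> str:
    return html.replace("gap:16px", "gap:24px")


validation = run_validation(
    '<div style="display:flex;gap:16px"></div>', fake_measure, fake_fix
)
```

Separating the loop from the measurement backend is also what would make a move from the Chrome MCP server to another browser integration a matter of swapping the `measure` callable.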

[DESIGN SYSTEM EXTRACTION]

[LAYOUT TRANSFORMATION]

EXPLORE UI/UX WORKFLOWS

[MULTI-AGENT DESIGN WORKFLOWS]

Where most design tools force linear iteration—create, review, revise, repeat—I built a radically different approach through `/design-explore-ui` and `/design-explore-ux` commands spawning four parallel agents working in isolated sandboxes to generate divergent design directions simultaneously along a conservative-to-experimental spectrum. When invoking `/design-explore-ui` with "dashboard layout for analytics page" or "pricing card component," the system spawns four independent Claude agents in E2B cloud sandboxes (falling back to Git worktrees if unavailable), each receiving identical access to Design Bridge registry components and tokens but constrained to different positions: Direction 1 (Conservative) uses rigid grids and conventional patterns, Direction 2 (Refined) introduces structured variations with subtle emphasis, Direction 3 (Expressive) implements intentional grid breaks and bold token usage, Direction 4 (Experimental) pushes boundaries with unconventional spatial relationships while maintaining design system coherence. (Note: With Claude Code's late 2025 release of native sandbox capabilities, this workflow can also leverage Claude Code's built-in sandboxes. However, there's a meaningful difference in session duration—E2B sandboxes can remain active for up to 24 hours, while Claude Code sandboxes appear limited to 5 hours, though I may have misunderstood the specifics as of this writing. As AI models become increasingly capable of autonomous operation over extended periods, longer-running sandboxes offer clear advantages, particularly for workflows instructing agents to iteratively loop over operations for sustained durations. Both approaches have merit depending on the use case.) The critical insight: all four use the *same* components and tokens—they differ only in *how* assets are applied, ensuring whatever direction wins is already compatible with existing design system infrastructure. 
The UX counterpart focuses on interaction patterns, information architecture, navigation flows, and mental models rather than visual treatments. Each agent works autonomously, reading the registry, generating specifications or code, writing outputs to `.design/explorations/[name]-[timestamp]/`—all in parallel, compressing weeks of sequential iteration into a single concurrent session.
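
The fan-out itself is a small amount of code. The sketch below uses `asyncio.gather` to run four placeholder agents concurrently with an identical registry and a different direction each; `run_agent` is a stub standing in for a sandboxed Claude session, and every name here is hypothetical.

```python
import asyncio

DIRECTIONS = ["conservative", "refined", "expressive", "experimental"]


async def run_agent(direction: str, brief: str, registry: dict) -> dict:
    """Placeholder for one sandboxed agent; a real one would call a model."""
    await asyncio.sleep(0)  # yield control, standing in for real async work
    return {
        "direction": direction,
        "brief": brief,
        "components": sorted(registry["components"]),
    }


async def explore(brief: str, registry: dict) -> list:
    # Every agent receives the same registry; only the direction differs.
    return await asyncio.gather(*(run_agent(d, brief, registry) for d in DIRECTIONS))


results = asyncio.run(
    explore("pricing card component", {"components": {"card", "button"}})
)
```

`asyncio.gather` returns results in the order the coroutines were passed, so each output can be matched to its direction without extra bookkeeping.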

The real power emerges when merging successful directions into the main project through BUMBA Memory MCP integration, transforming isolated exploration into shared organizational knowledge. When Direction 3's expressive dashboard proves compelling, designers use `/memory` to capture the successful agent's complete context—design decisions, rationale, token applications, component compositions—into the BUMBA Memory system enabling cross-agent context sharing. The exploration agent stores knowledge entries documenting why spacing choices created better visual hierarchy, which color applications improved contrast, which layout patterns resonated. The primary Claude session can then query this memory via natural language searches, retrieving insights to inform subsequent work, avoid repeating failed experiments, and build institutional knowledge about what approaches work for this specific design system. This creates a learning loop where exploratory sandbox work feeds back into main branch development through structured memory rather than just file merges, accumulating design intelligence that transcends individual sessions and builds toward increasingly refined, evidence-based decisions grounded in actual testing across multiple parallel approaches.

[EXPLORE UI WORKFLOW]

*The system generates much higher-quality design outputs; I will recut this video soon.

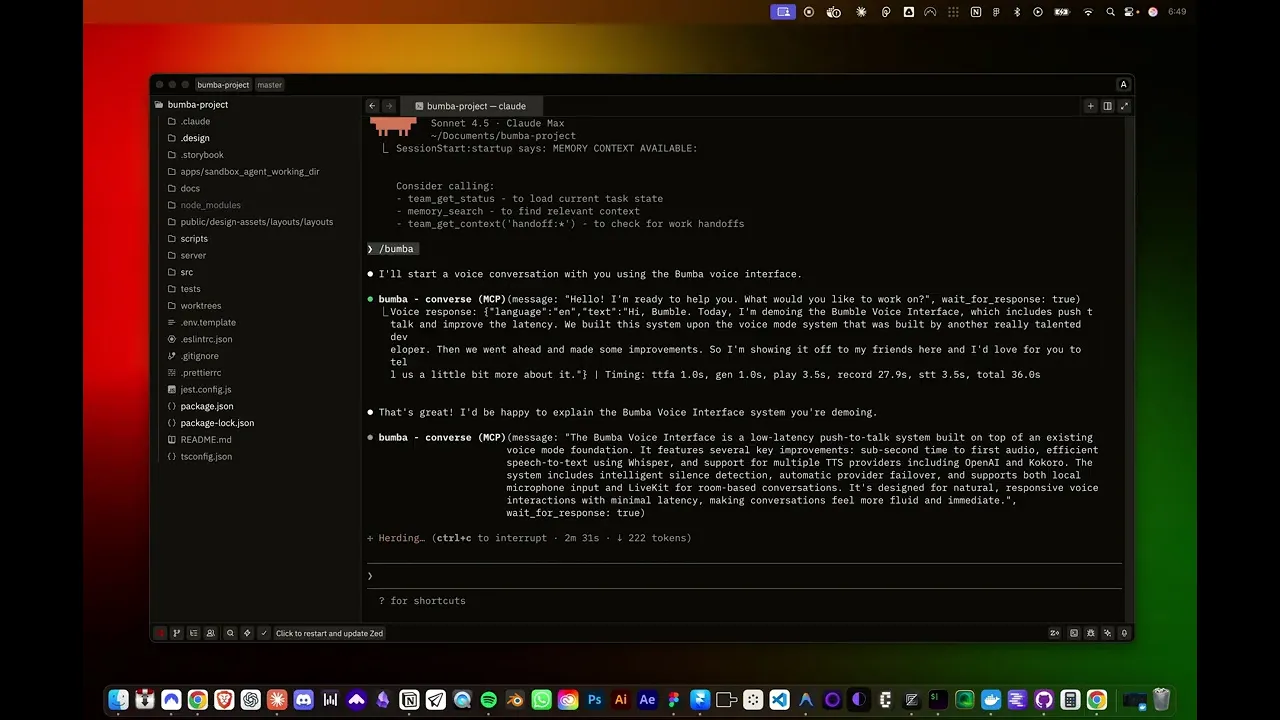

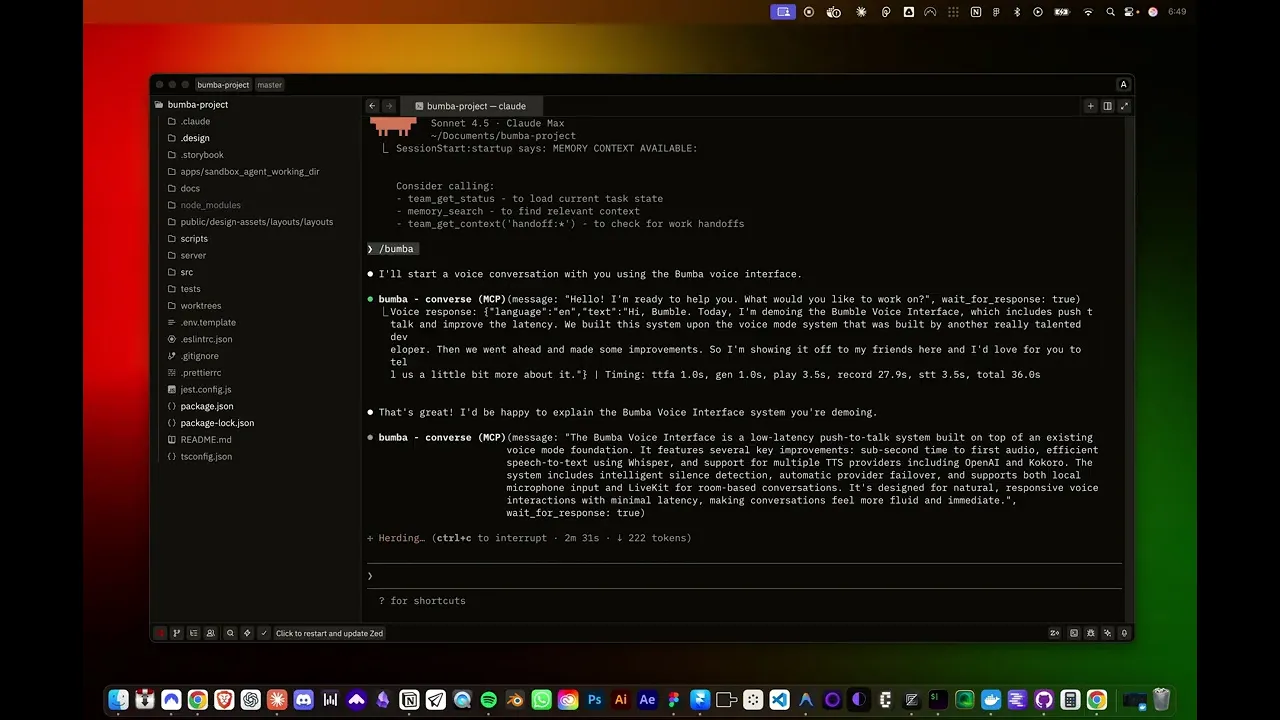

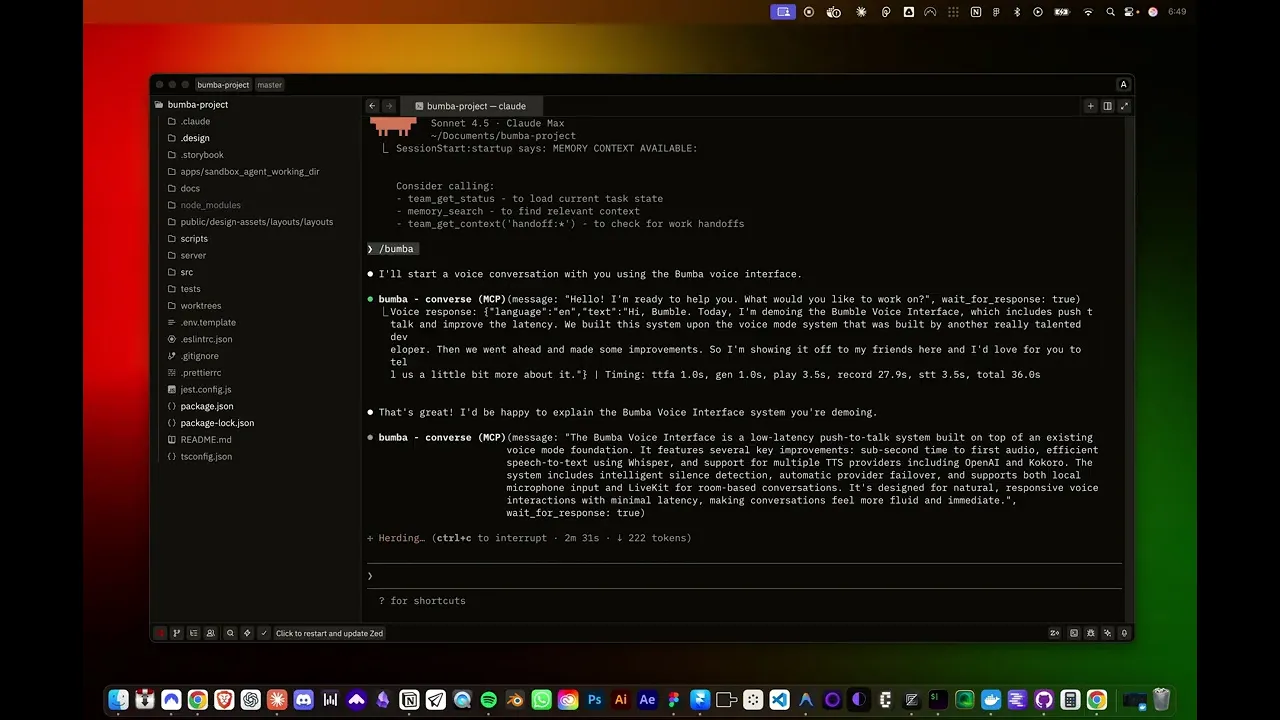

BUMBA VOICE SYSTEM

[TALKING DESIGN WITH CLAUDE]

BUMBA Voice emerged from building upon mbailey's VoiceMode project through 8 weeks of intensive development, transforming it into a production-ready Model Context Protocol server delivering 60% faster response times than the original sequential voice flow. I implemented a multi-provider voice services ecosystem supporting both cloud and local-first approaches: OpenAI API for cloud TTS/STT with premium voices (alloy, nova, shimmer), Whisper.cpp for on-device speech-to-text with Metal acceleration on macOS and CUDA on Linux (using the 142MB base model for a good speed-accuracy balance without API fees), Kokoro TTS providing 50+ multilingual voices running locally via kokoro-fastapi (American, British, Spanish, French, Italian, Portuguese, Chinese, Japanese, Hindi variants), and LiveKit for room-based real-time communication. The decision to support local AI models was deliberate: it enables operation without per-request API fees. Since I built this system, Resemble AI has released Chatterbox, a free, open-source text-to-speech model offering improved features. Looking forward, I'd consider rebuilding portions of the voice system to leverage Chatterbox's enhanced capabilities while maintaining the same multi-provider flexibility. Rather than introducing deployment complexity through Docker containerization, I implemented native service management through platform-specific init systems (launchd on macOS and systemd on Linux), enabling Whisper and Kokoro to run as persistent background services that auto-start on login, with health checking and automatic failover ensuring seamless transitions between providers. 
The provider registry maintains O(1) lookups through cached health checks, tracks available models and voices per endpoint, implements preference-based voice selection (reading `.voices.txt` for ordered preferences), and provides graceful degradation when preferred providers are offline, automatically switching between local Kokoro and cloud OpenAI without conversation interruption. Developers can work entirely offline using local services (zero API costs), mix local STT with cloud TTS for specific voice preferences, or rely fully on cloud services—all controlled through environment variables in `voicemode.env`.
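
A minimal sketch of preference-ordered selection with cached health checks follows. It is not the actual provider registry: the class shape, the 30-second cache lifetime, and the injected `check` callable are assumptions, and the fake check simply pretends the local service is down.

```python
import time
from typing import Callable, List, Optional


class ProviderRegistry:
    """Pick the first healthy provider in preference order, caching health checks."""

    def __init__(
        self,
        check: Callable[[str], bool],
        ttl: float = 30.0,  # assumed cache lifetime in seconds
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.check = check
        self.ttl = ttl
        self.clock = clock
        self.cache = {}  # provider -> (checked_at, healthy)

    def healthy(self, provider: str) -> bool:
        cached = self.cache.get(provider)
        if cached is not None and self.clock() - cached[0] < self.ttl:
            return cached[1]  # dictionary hit: no network round trip
        ok = self.check(provider)
        self.cache[provider] = (self.clock(), ok)
        return ok

    def select(self, preferences: List[str]) -> Optional[str]:
        return next((p for p in preferences if self.healthy(p)), None)


check_calls = []


def fake_check(provider: str) -> bool:
    check_calls.append(provider)
    return provider != "kokoro"  # pretend the local TTS service is offline


providers = ProviderRegistry(fake_check)
first_choice = providers.select(["kokoro", "openai"])
second_choice = providers.select(["kokoro", "openai"])
```

The second `select` call is answered entirely from the cache, which is the property that keeps failover from adding latency to every conversational turn.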

[DESIGNING PTT SYSTEMS]

The Push-To-Talk feature gives the user greater control over the cadence of the conversation with the model. I built the system to record audio only while a key is pressed and held. This moved the system away from auto-recording and improved the results of voice sessions.

[IMPROVING LATENCY]

The cornerstone innovation I built for BUMBA Voice is its Push-to-Talk (PTT) system, addressing a fundamental friction where automatic Voice Activity Detection forces immediate recording after AI responses, giving developers no time to think or avoid capturing background noise. Built through a grueling 4-phase process evolving from basic keyboard monitoring to a production-grade state machine, the PTT implementation provides three modes: Hold Mode (classic walkie-talkie behavior where pressing and holding the Right Option key records, releasing stops), Toggle Mode (press once to start recording hands-free, press again to stop—ideal for longer dictation or accessibility needs), and Hybrid Mode (the innovative sweet spot combining manual keyboard control with automatic silence detection, letting developers hold the PTT key while speaking then automatically stopping on detected silence, preventing accidental continued recording while maintaining user agency). The architecture implements a 7-state lifecycle managed through `PTTStateMachine` (IDLE → WAITING_FOR_KEY → KEY_PRESSED → RECORDING → RECORDING_STOPPED/CANCELLED → PROCESSING → IDLE) with validation preventing invalid state transitions, exponential backoff retry logic recovering from keyboard monitoring failures, configurable timeout protection (default 120 seconds max), and comprehensive resource cleanup ensuring microphone access releases properly even when recordings cancel mid-capture. Cross-platform keyboard monitoring proved exceptionally challenging—macOS requires Accessibility permissions, Linux demands either root or adding the user to the `input` group, Windows needs UAC elevation—leading me to develop platform-specific permission checking utilities, detailed error messages guiding users through OS-specific authorization, and an interactive setup wizard validating permissions before PTT initialization. 
The implementation uses pynput for keyboard event capture, sounddevice for low-latency audio recording, webrtcvad for robust voice activity detection in Hybrid mode, and asyncio for coordinating the event-driven workflow maintaining thread safety.
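
The seven-state lifecycle with transition validation can be sketched as an enum plus an allow-list. The state names come from the description above, but the transition table is an assumption (in particular, that a cancelled recording returns straight to IDLE), and this is not the actual `PTTStateMachine` implementation.

```python
from enum import Enum, auto


class PTTState(Enum):
    IDLE = auto()
    WAITING_FOR_KEY = auto()
    KEY_PRESSED = auto()
    RECORDING = auto()
    RECORDING_STOPPED = auto()
    RECORDING_CANCELLED = auto()
    PROCESSING = auto()


S = PTTState

# Assumed transition table; the real one may allow additional edges.
ALLOWED = {
    S.IDLE: {S.WAITING_FOR_KEY},
    S.WAITING_FOR_KEY: {S.KEY_PRESSED, S.IDLE},
    S.KEY_PRESSED: {S.RECORDING},
    S.RECORDING: {S.RECORDING_STOPPED, S.RECORDING_CANCELLED},
    S.RECORDING_STOPPED: {S.PROCESSING},
    S.RECORDING_CANCELLED: {S.IDLE},
    S.PROCESSING: {S.IDLE},
}


class PTTStateMachine:
    def __init__(self) -> None:
        self.state = S.IDLE

    def transition(self, new_state: PTTState) -> None:
        """Move to new_state, rejecting any edge not in the allow-list."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"invalid transition {self.state.name} -> {new_state.name}"
            )
        self.state = new_state


machine = PTTStateMachine()
for step in (S.WAITING_FOR_KEY, S.KEY_PRESSED, S.RECORDING,
             S.RECORDING_STOPPED, S.PROCESSING, S.IDLE):
    machine.transition(step)
```

Rejecting invalid edges at a single choke point is what lets cleanup code (releasing the microphone, stopping the key listener) rely on the state it observes.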

[BUMBA VOICE DEMO]

[IMPROVING LATENCY]

Achieving 60% faster response times (1.4s average vs 3.5s) required systematic latency reduction through parallel processing, intelligent caching, and zero-copy audio handling. The original VoiceMode processed TTS and STT sequentially (generate speech, play fully, wait for silence, record, upload audio file, wait for transcription), introducing compounding delays. I reimagined this as a pipelined architecture where TTS generation and playback happen concurrently (streaming PCM audio chunks as they arrive rather than buffering), recording preparation occurs during TTS playback (initializing sounddevice streams, validating microphone access, pre-warming VAD instances), and STT processing begins immediately after recording through zero-copy buffer passing. WebRTC VAD integration dramatically improved silence detection responsiveness: the original used energy-based thresholds that frequently mis-triggered on background noise, while WebRTC VAD's statistical, Gaussian-mixture-model classifier with configurable aggressiveness (0-3 scale) provides near-instant speech boundary detection, stopping recording 200-400ms faster by accurately identifying silence even in moderately noisy environments. HTTP connection pooling eliminated repeated TCP handshake overhead (persistent connections reduce per-request latency by 50-150ms), provider health caching prevented redundant availability checks, and intelligent buffering for streaming TTS balanced responsiveness with playback stability (a 150ms initial buffer prevents choppy audio while maintaining sub-second time-to-first-audio). The result: Time to First Audio averages 0.8s (down from 2.1s), Speech-to-Text processing completes in 0.4s on average (down from 1.2s), and the entire turnaround meets the sub-2-second target that makes voice interaction feel natural, transforming voice mode from a novelty into a genuinely practical development tool for rapid iteration and hands-free coding workflows.
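
The headline percentage follows directly from the before and after figures quoted above. This short check recomputes the reduction for each pair; the helper name `reduction` is mine, and the inputs are the averages stated in the text.

```python
def reduction(before: float, after: float) -> float:
    """Percentage reduction from before to after, rounded to one decimal place."""
    return round((before - after) / before * 100, 1)


# (before, after) in seconds, as reported in the paragraph above.
figures = {
    "full turn": (3.5, 1.4),
    "time to first audio": (2.1, 0.8),
    "speech-to-text": (1.2, 0.4),
}
for label, (before, after) in figures.items():
    print(f"{label}: {before}s -> {after}s ({reduction(before, after)}% faster)")
```

The full-turn figure works out to the stated 60%, while the two component stages improve by slightly more, roughly 62% and 67%.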


[COMBINING FEATURES]

While each BUMBA feature has standalone value (voice interaction for hands-free control, design token extraction for systematic theming, layout transformation for pixel-perfect code, parallel exploration for divergent thinking), the real power I discovered emerges when these capabilities combine into cohesive workflows that manual processes cannot match. I deliberately designed the architecture with compositional thinking at its core, ensuring outputs from one subsystem become inputs to another through standardized formats: the Design Bridge registry serves as the universal component catalog that layout extraction populates, design exploration references, and voice commands query; design tokens flow from Figma extraction through registry storage to framework transformation and Storybook theming; sandbox exploration outputs feed into memory systems that inform main-branch decisions; and voice transcriptions trigger MCP tool chains that orchestrate multi-step design operations without touching a keyboard.

Consider the workflow demonstrated in the accompanying video, which coordinates three distinct BUMBA subsystems through natural language voice commands. Using BUMBA Voice via push-to-talk, the designer speaks "Use the ShadCN MCP server to find all button components and add them to my design catalog." Claude activates the ShadCN MCP integration, queries available components, retrieves definitions and variants, then invokes the Design Catalog generator to create HTML pages with live previews, automatically routing components through ComponentPageRouter's pattern detection. The designer continues: "Now transform those components to React and re-theme them using my brand colors." Claude reads the extracted ShadCN components, invokes the React transformer with the project's design token registry (custom color palette, typography scales, spacing values from Figma), generates React component files with imported design tokens as CSS-in-JS variables, updates the component registry, auto-generates Storybook stories, and applies the custom BUMBA Storybook theme so the designer's visual identity is reflected immediately.

This multi-stage workflow (component discovery via external MCP → catalog aggregation → framework transformation → token application → documentation generation) executes in under 30 seconds through voice commands alone. It orchestrates systems that individually took weeks to build but compose seamlessly because they share common data formats (JSON registries), unified storage conventions (`.design/` directory), and coordinated APIs (MCP tool interfaces). The video captures what makes BUMBA transformative: not any single feature in isolation, but the emergent capability that arises when voice control, design extraction, component transformation, catalog generation, and branded documentation work as a unified system, enabling designers to think at the level of intent while composed infrastructure handles the mechanical orchestration that traditionally requires dozens of manual steps across disconnected tools.
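The idea that subsystems compose because they share a JSON registry under `.design/` can be sketched as follows. This is a simplified illustration under stated assumptions: the file name `components.json`, the entry fields (`source`, `targets`), and every function name are hypothetical, not BUMBA's actual schema.

```python
import json
import tempfile
from pathlib import Path

REGISTRY_NAME = "components.json"  # hypothetical file name inside .design/


def registry_path(project_root: Path) -> Path:
    return project_root / ".design" / REGISTRY_NAME


def load_registry(project_root: Path) -> dict:
    """Read the shared registry, or return an empty one if none exists yet."""
    path = registry_path(project_root)
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def register_component(project_root: Path, name: str, entry: dict) -> None:
    """Producer side: an extraction step records a component."""
    registry = load_registry(project_root)
    registry[name] = entry
    path = registry_path(project_root)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(registry, indent=2), encoding="utf-8")


def components_for(project_root: Path, framework: str) -> list[str]:
    """Consumer side: a transformer asks which components target a framework."""
    registry = load_registry(project_root)
    return sorted(
        name for name, entry in registry.items()
        if framework in entry.get("targets", [])
    )


def demo() -> list[str]:
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        register_component(root, "Button", {"source": "shadcn", "targets": ["react"]})
        register_component(root, "Card", {"source": "figma", "targets": ["html"]})
        return components_for(root, "react")
```

Neither side imports the other; the producer and the consumer only agree on the file location and the shape of an entry, which is what lets separately built subsystems chain together.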

[COMPOSABLE COMPONENTS]

12. OUTRO

[HORIZONS AND REFLECTIONS]

Building BUMBA across 8 months through iterative collaboration with Claude taught me fundamental lessons about architecting AI-assisted development systems—lessons earned through countless late nights debugging state machines, rewriting orchestration logic when elegant theories met messy reality, and persistently iterating through failures until patterns emerged. The most critical insight: successful AI tooling isn't about replacing human judgment with automation—it's about building composable infrastructure amplifying human intent through systematic data flows, standardized interfaces, and intelligent orchestration. Every BUMBA subsystem emerged from identifying friction points in real design-to-code workflows where manual processes introduced errors, consumed cognitive bandwidth, or created synchronization gaps. The Figma plugin solved "design tokens exist in Figma but developers manually copy hex codes," creating drift; the layout-to-HTML pipeline with progressive context layering solved "screenshots get translated to code that looks structurally similar but visually wrong," eliminating guess-and-check iteration; the PTT system solved "I need to think before responding but automatic VAD starts recording immediately," restoring agency; Design Director solved "product strategy lives in stakeholder heads but never crystallizes into implementable specifications," bridging business requirements and technical execution. Each solution required not just clever prompting but architected systems with registries for O(1) lookups, state machines for lifecycle management, health checking for fault tolerance, and MCP protocols for cross-tool interoperability—infrastructure remaining valuable regardless of which frontier model generates actual code or content. 
The dedication this required—treating BUMBA as my graduate-level education in AI orchestration, trading evenings and weekends for hands-on learning impossible to gain any other way—fundamentally shaped how I understand what's possible when creative professionals gain sophisticated AI tooling matched to their workflows.
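The "state machines for lifecycle management" mentioned above can be illustrated with a small push-to-talk sketch. The states, event names, and transition table here are assumptions made for the example; the real PTT lifecycle may have more states. The transition table is a dictionary, so each lookup is a constant-time hash access, the same property the registries rely on.

```python
from enum import Enum, auto


class PTTState(Enum):
    IDLE = auto()
    RECORDING = auto()
    PROCESSING = auto()


# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    (PTTState.IDLE, "key_down"): PTTState.RECORDING,
    (PTTState.RECORDING, "key_up"): PTTState.PROCESSING,
    (PTTState.RECORDING, "cancel"): PTTState.IDLE,
    (PTTState.PROCESSING, "done"): PTTState.IDLE,
}


class PushToTalk:
    """Tracks the recording lifecycle and rejects out-of-order events."""

    def __init__(self) -> None:
        self.state = PTTState.IDLE

    def handle(self, event: str) -> PTTState:
        next_state = TRANSITIONS.get((self.state, event))
        if next_state is None:
            raise ValueError(f"event {event!r} is not valid in state {self.state.name}")
        self.state = next_state
        return next_state
```

Making invalid transitions raise, rather than silently ignoring them, surfaces bugs such as a key-up event arriving before recording has started.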

The knowledge accumulated through persistent experimentation reveals patterns transcending specific technologies: standardized data formats enable composition (JSON registries, markdown specifications, screenshot + JSON pairs), stateful orchestration beats stateless one-shots (Registry Manager tracking transformations, PTT state machines managing recording lifecycle, validation reports documenting refinement trajectories), visual verification catches what structural validation misses (three-pass screenshot comparison, Design Catalog for token presentation, Storybook for component behavior), and multi-modal inputs compound understanding (layout JSON + Figma screenshot + component registry + design tokens create richer context than any single source). These insights—learned through building systems that initially failed, identifying why they failed, then architecting solutions addressing root causes rather than symptoms—directly inform how BUMBA evolves as models improve. Current frontier models excel at understanding intent and generating coherent outputs but struggle with pixel-perfect visual reproduction without iterative refinement—hence the three-pass validation loop. As vision capabilities advance, BUMBA's architecture naturally accommodates enhancement: replace screenshot comparison with direct visual diffing, augment text-based component descriptions with image-based variant generation, enable voice commands referencing visual elements, introduce real-time design collaboration where Claude manipulates Figma canvases through Talk-To-Figma MCP while designers watch changes materialize. The registry-based architecture means these capabilities integrate without rebuilding—new MCP tools slot into existing workflows, enhanced models process the same standardized inputs with higher fidelity, additional orchestration layers compose over proven infrastructure.

It's worth acknowledging that in late 2025, the Anthropic team building Claude Code shipped numerous powerful features—native agent systems, enhanced MCP integrations, improved context management, and sophisticated tool orchestration capabilities—many of which overlap with features I built independently during Bumba's development. This convergence validates the architectural patterns I explored while highlighting an important reality: some of what I built experimentally became officially supported through Claude Code's evolution. The difference lies in approach—where Claude Code provides production-grade, officially supported features designed for broad adoption, Bumba served as my research laboratory for understanding multi-agent systems deeply, testing compositional patterns, and pushing boundaries of what's possible when you're willing to over-engineer in service of learning. This case study intentionally presents a limited collection of features I'm comfortable sharing publicly, representing perhaps 30-40% of what I actually built. There's substantial additional work beyond what's documented here—including deep integrations with Claude Code native features, experimental agent coordination patterns, advanced workflow automation leveraging the Ralph plugin, and specialized tooling for design system governance—that remains private either because it's too experimental, too tightly coupled to specific workflows, or simply not ready for public documentation. What I've shared represents the core architectural insights and proven patterns that translate beyond my specific use cases, demonstrating principles of composability, verification, and human-AI collaboration that remain valuable regardless of which specific features Claude Code ships officially.

Looking forward, BUMBA serves as a foundation for increasingly sophisticated design-development systems that leverage improving model capabilities while preserving hard-won lessons about composability, verification, and human-AI collaboration boundaries. Planned extensions include automated accessibility auditing that reads component registries, validates WCAG compliance through browser testing, and generates remediation code directly into transformed components; design system evolution tracking, where memory MCP captures not just successful exploration directions but *why* certain design decisions succeeded, building institutional knowledge about brand expression patterns, user testing outcomes, and performance characteristics that inform future choices; multi-agent design sprints, where specialized agents operate in parallel sandboxes evaluating different aspects and contribute findings to shared memory stores that the orchestrating agent synthesizes into holistic recommendations; and conversational design handoff, where instead of Figma files and specification documents, designers narrate intent via BUMBA Voice, and Claude, equipped with complete design system context from registries, generates multiple interpreted implementations across the conservative-to-experimental spectrum for immediate review. These aren't speculative futures requiring hypothetical model capabilities. They're natural extensions of BUMBA's existing architecture, leveraging infrastructure already proven through production use, simply waiting for models that handle more nuanced visual understanding, more reliable code generation across larger contexts, and more sophisticated reasoning about design trade-offs. BUMBA demonstrates that building for AI-assisted workflows means creating systems that remain valuable across model generations, where today's careful orchestration and verification become tomorrow's automatic optimization as capabilities improve, and where true competitive advantage lies not in prompting techniques that become obsolete with each model release, but in composable infrastructure that grows more powerful as the models it orchestrates become more capable.

The journey of building BUMBA—navigating the learning curve of multi-agent orchestration, discovering architectural patterns through trial and error, understanding what works in production versus what sounds elegant in theory—revealed how steep the path is from "I want AI to help my team" to "I have robust systems delivering reliable value." Most creative businesses and design-focused teams recognize AI's transformative potential but lack the technical foundation to implement sophisticated workflows beyond basic prompt engineering. My ambition extends beyond building tools for myself: I want to help others skip the painful parts of this learning curve, translating the hard-won lessons from BUMBA's development into practical guidance for teams implementing AI-assisted design and development systems. Whether consulting with creative agencies needing custom agent architectures matched to their specific workflows, helping product teams establish design-to-code pipelines reducing implementation cycles, or working with businesses building internal AI tooling for their designers and developers—the patterns proven through BUMBA's architecture provide a foundation for accelerating others' AI adoption journeys. The dedication required to build something substantial taught me not just what's technically possible, but what's practically achievable when you understand the gaps between AI capabilities and real-world creative workflows, then architect systems bridging those gaps with composable infrastructure rather than brittle prompts. I'm eager to bring that understanding to teams ready to move beyond experimentation into production-grade AI tooling that genuinely amplifies their creative output.